Notes on installing and setting up Hadoop (pseudo-distributed HDFS), HBase, MongoDB and Redis on Linux


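I recommend creating a dedicated hadoop user and doing everything inside that user's home directory. This avoids a lot of permission problems.

For example:

JDK 8 goes in /home/hadoop/hadoop/jdk8

Hadoop goes in /home/hadoop/hadoop/hadoop

HBase goes in /home/hadoop/hadoop/hbase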

Basic configuration (optional)

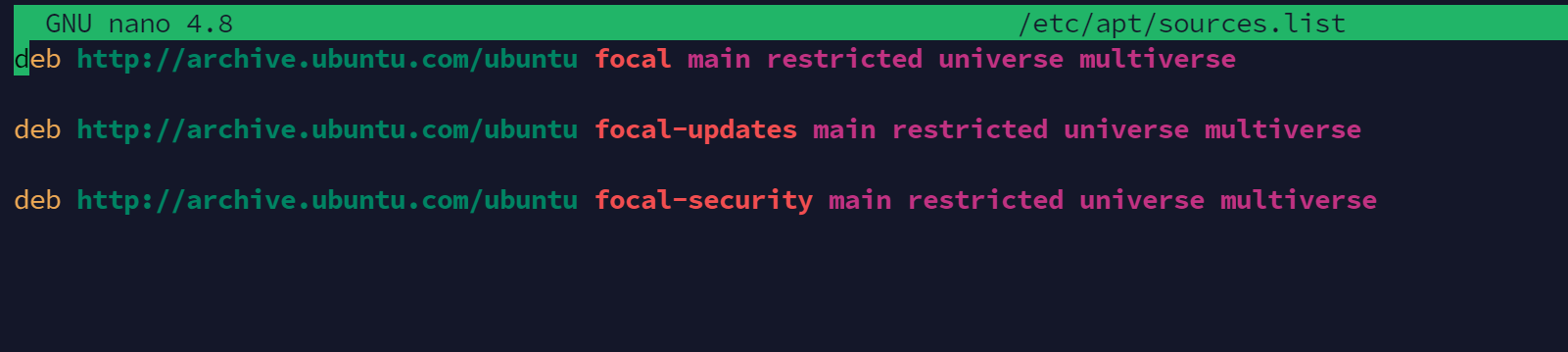

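Switching the apt mirror

Replace the contents of /etc/apt/sources.list with the mirror list below (these entries are for Ubuntu 20.04, codename focal):

sudo nano /etc/apt/sources.list

In nano, Ctrl+K deletes the entire current line.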

deb http://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb http://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb-src http://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
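Instead of deleting and pasting lines in nano, the same eight entries can be generated from a release codename. This is a minimal sketch; the function name ustc_sources is hypothetical, and the mirror URL and components are copied from the list above.

```shell
#!/usr/bin/env bash
# Print a USTC sources.list for an Ubuntu codename.
# Default is focal (Ubuntu 20.04), matching the list above.
ustc_sources() {
  local codename=${1:-focal}
  local mirror="http://mirrors.ustc.edu.cn/ubuntu/"
  local components="main restricted universe multiverse"
  local suite
  # One binary (deb) and one source (deb-src) line per suite
  for suite in "$codename" "$codename-security" "$codename-updates" "$codename-backports"; do
    echo "deb $mirror $suite $components"
    echo "deb-src $mirror $suite $components"
  done
}
```

Usage: back up the old file with sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak, then run ustc_sources "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list > /dev/null followed by sudo apt update. lsb_release -cs prints the codename of the running release.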


Installing openssh-server

sudo apt install openssh-server

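Setting up passwordless SSH login

First connect to localhost once so that ~/.ssh gets initialized:

ssh localhost

Answer yes and enter your password when prompted.

When it finishes, type exit to leave.

Go into ~/.ssh/ and generate a key pair: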

hadoop@hadoop:~$ cd ~/.ssh/

hadoop@hadoop:~/.ssh$

hadoop@hadoop:~/.ssh$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:heMi8YGz/t+k+VTe3wGO+CTTKTfn6CEhLJABCAw0GnY hadoop@hadoop

The key's randomart image is:

+---[RSA 3072]----+

|X=.E |

|+oo o. . |

|. o+ . o . |

| .=.o o |

| o.ooS. .. |

| . .... +o+.. |

| . *oO.o.. |

| . *O * .o|

| ..+.++ . o|

+----[SHA256]-----+

hadoop@hadoop:~/.ssh$ cat ./id_rsa.pub >> ./authorized_keys

Connect to localhost again:

ssh localhost

If you are not asked for a password, the setup succeeded.
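The key setup above appends id_rsa.pub every time it is run. A sketch that only adds the key if it is not already present, and tightens permissions, could look like this. The function name authorize_key is hypothetical; ssh-keygen -N "" (empty passphrase) and -f (output file) are standard options that skip the interactive prompts.

```shell
#!/usr/bin/env bash
# Append a public key to an authorized_keys file only if it is not already
# listed, so re-running the setup does not create duplicate entries.
authorize_key() {
  local pub=$1 auth=$2
  mkdir -p "$(dirname "$auth")"
  chmod 700 "$(dirname "$auth")"
  touch "$auth"
  # -x: match the whole line, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF -- "$(cat "$pub")" "$auth"; then
    cat "$pub" >> "$auth"
  fi
  # sshd (StrictModes) refuses key files writable by group or others
  chmod 600 "$auth"
}

# Usage (commented out so the sketch is safe to source):
# ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa
# authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
# ssh -o BatchMode=yes localhost true && echo "passwordless login OK"
```

BatchMode=yes makes ssh fail instead of prompting for a password, so the last line is a quick non-interactive check.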

Downloading the required files

Download link:

https://vip.123pan.cn/1815661672/%E7%9B%B4%E9%93%BE/hadoop/hadoop.zip

You may need to install unzip first:

sudo apt install unzip

Unzip it into the hadoop user's home directory:

hadoop@hadoop:~$ pwd

/home/hadoop

hadoop@hadoop:~$ ls hadoop/

hadoop hbase jdk8

All configuration files are already set up; you only need to set the environment variables and start the services.

Setting environment variables

Grant execute permissions:

chmod +x /home/hadoop/hadoop/hadoop/bin/*

chmod +x /home/hadoop/hadoop/hadoop/sbin/*

chmod +x /home/hadoop/hadoop/hbase/bin/*

chmod +x /home/hadoop/hadoop/jdk8/bin/*

Then append the following to the end of ~/.bashrc:

export JAVA_HOME=/home/hadoop/hadoop/jdk8

export HADOOP_HOME=/home/hadoop/hadoop/hadoop

export HBASE_HOME=/home/hadoop/hadoop/hbase

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export PATH=$JAVA_HOME/bin:$HBASE_HOME/bin:$PATH

Then run source ~/.bashrc to apply the changes.
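The chmod and ~/.bashrc steps above can be wrapped into a sketch that is safe to run more than once. It assumes the directory layout used in this guide (HADOOP_ROOT defaults to /home/hadoop/hadoop). The function names and the marker comment "# >>> hadoop env >>>" are hypothetical, added so that a second run does not append the block twice.

```shell
#!/usr/bin/env bash
# Layout assumed by this guide; override HADOOP_ROOT if yours differs.
HADOOP_ROOT=${HADOOP_ROOT:-/home/hadoop/hadoop}

make_bins_executable() {
  local dir
  for dir in "$HADOOP_ROOT/hadoop/bin" "$HADOOP_ROOT/hadoop/sbin" \
             "$HADOOP_ROOT/hbase/bin" "$HADOOP_ROOT/jdk8/bin"; do
    # Only touch directories that exist; report the rest
    if [ -d "$dir" ]; then
      chmod +x "$dir"/*
    else
      echo "missing: $dir" >&2
    fi
  done
}

add_hadoop_env() {
  local rc=${1:-$HOME/.bashrc}
  local marker="# >>> hadoop env >>>"
  # Skip if the block was already added on a previous run
  if grep -qF -- "$marker" "$rc" 2>/dev/null; then
    return 0
  fi
  # Unquoted EOF: $HADOOP_ROOT expands now, \$VAR stays literal in the file
  cat >> "$rc" <<EOF
$marker
export JAVA_HOME=$HADOOP_ROOT/jdk8
export HADOOP_HOME=$HADOOP_ROOT/hadoop
export HBASE_HOME=$HADOOP_ROOT/hbase
export PATH=\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin:\$PATH
export PATH=\$JAVA_HOME/bin:\$HBASE_HOME/bin:\$PATH
EOF
}
```

Usage: run make_bins_executable, then add_hadoop_env, then source ~/.bashrc.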

Check that everything works:

hadoop@hadoop:~$ javac -version

javac 1.8.0_401

hadoop@hadoop:~$ hadoop version

Hadoop 2.9.2

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 826afbeae31ca687bc2f8471dc841b66ed2c6704

Compiled by ajisaka on 2018-11-13T12:42Z

Compiled with protoc 2.5.0

From source with checksum 3a9939967262218aa556c684d107985

This command was run using /home/hadoop/hadoop/hadoop/share/hadoop/common/hadoop-common-2.9.2.jar

hadoop@hadoop:~$ hbase version

HBase 1.5.0

Source code repository git://apurtell-ltm4.internal.salesforce.com/Users/apurtell/src/hbase revision=d14e335edc9c22c30827bc75e73b5303ca64ee0d

Compiled by apurtell on Tue Oct 8 17:38:49 PDT 2019

From source with checksum ddbcb73f8120b32e294fb7d4190d56c4

Starting the services

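Format the NameNode first (needed only before the very first start; formatting again wipes the existing HDFS metadata):

hdfs namenode -format

Then run start-all.sh to start Hadoop (HDFS and YARN), followed by start-hbase.sh to start HBase.

The first start pauses once for confirmation; type yes and press Enter to continue.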

hadoop@hadoop:~$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /home/hadoop/hadoop/hadoop/logs/hadoop-hadoop-namenode-hadoop.out

localhost: starting datanode, logging to /home/hadoop/hadoop/hadoop/logs/hadoop-hadoop-datanode-hadoop.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/hadoop/hadoop/logs/hadoop-hadoop-secondarynamenode-hadoop.out

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop/hadoop/logs/yarn-hadoop-resourcemanager-hadoop.out

localhost: starting nodemanager, logging to /home/hadoop/hadoop/hadoop/logs/yarn-hadoop-nodemanager-hadoop.out

hadoop@hadoop:~$ start-hbase.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hbase/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hbase/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

localhost: running zookeeper, logging to /home/hadoop/hadoop/hbase/bin/../logs/hbase-hadoop-zookeeper-hadoop.out

running master, logging to /home/hadoop/hadoop/hbase/logs/hbase-hadoop-master-hadoop.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hbase/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

: running regionserver, logging to /home/hadoop/hadoop/hbase/logs/hbase-hadoop-regionserver-hadoop.out

: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

: SLF4J: Class path contains multiple SLF4J bindings.

: SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hbase/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

: SLF4J: Found binding in [jar:file:/home/hadoop/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Run jps to check that all services have started:

hadoop@hadoop:~$ jps

9156 HMaster

7908 NameNode

8629 NodeManager

8053 DataNode

9639 Jps

9276 HRegionServer

8397 ResourceManager

9087 HQuorumPeer

8239 SecondaryNameNode

If you see the same nine processes (including Jps itself), everything started successfully.
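The jps check above can be scripted. This sketch reads jps output on stdin and lists any of the eight expected daemons that are missing (the ninth line is Jps itself). The function name check_daemons is hypothetical, and the expected list is taken from the output above, so adjust it if you run a different set of services.

```shell
#!/usr/bin/env bash
# Report which expected Hadoop/HBase daemons are absent from `jps` output.
check_daemons() {
  local expected="NameNode DataNode SecondaryNameNode ResourceManager NodeManager HMaster HRegionServer HQuorumPeer"
  local running missing=0 name
  # jps prints "<pid> <MainClass>"; keep only the class names
  running=$(awk '{print $2}')
  for name in $expected; do
    # -x: exact line match, so NameNode does not match SecondaryNameNode
    if ! grep -qx "$name" <<<"$running"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: jps | check_daemons && echo "all daemons up". A non-zero exit status means at least one daemon is missing, and its log under $HADOOP_HOME/logs or $HBASE_HOME/logs is the place to look next.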

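Open http://localhost:50070 for the HDFS NameNode web UI (Hadoop 2.x default port)

Open http://localhost:8088/cluster for the YARN ResourceManager web UI

Open http://localhost:16010/master-status for the HBase Master web UI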
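To check from the shell whether the three web UIs above are listening, here is a minimal sketch using bash's built-in /dev/tcp redirection (a bash feature, not a real device file). The function name port_open is hypothetical and the ports are the defaults listed above; it only shows that something accepts TCP connections on the port, not that the UI itself is healthy.

```shell
#!/usr/bin/env bash
# Return 0 if host:port accepts a TCP connection within 2 seconds.
port_open() {
  local host=$1 port=$2
  # timeout keeps a filtered port from hanging; the child bash closes fd 3 on exit
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# NameNode UI, YARN UI, HBase Master UI
for port in 50070 8088 16010; do
  if port_open localhost "$port"; then
    echo "port $port: listening"
  else
    echo "port $port: not reachable"
  fi
done
```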

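Installing Redis

Simply run sudo apt-get install redis-server.

Verify:

hadoop@hadoop:~$ redis-cli

127.0.0.1:6379>

A 127.0.0.1:6379> prompt means redis-cli has connected to the local server on the default port 6379.

Installing MongoDB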

Adapted from CSDN: https://blog.csdn.net/yutu75/article/details/110941936
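The repository line in the commands below hardcodes bionic (Ubuntu 18.04), while the mirror list earlier targets focal (Ubuntu 20.04). The bionic packages worked in this setup, but MongoDB 4.4 also publishes packages for focal, so you can build the line for your own release instead. This is a sketch; the function name mongodb_repo_line is hypothetical, and the rest of the line is copied from the command below.

```shell
#!/usr/bin/env bash
# Build the MongoDB 4.4 apt source line for a given Ubuntu codename.
mongodb_repo_line() {
  if [ -z "${1:-}" ]; then
    echo "usage: mongodb_repo_line <codename>" >&2
    return 1
  fi
  echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu ${1}/mongodb-org/4.4 multiverse"
}
```

Usage: mongodb_repo_line "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.4.list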

# Install dependencies

sudo apt-get install libcurl4 openssl

# Stop and remove any existing MongoDB installation

sudo service mongodb stop

sudo apt-get remove mongodb

# Import the public key used by the package management system

wget -qO - https://www.mongodb.org/static/pgp/server-4.4.asc | sudo apt-key add -

# If this does not print OK, run sudo apt-get install gnupg and then rerun the command above

# Register the MongoDB repository

echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.4 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.4.list

# Update the package index

sudo apt-get update

# Install MongoDB

sudo apt-get install -y mongodb-org=4.4.2 mongodb-org-server=4.4.2 mongodb-org-shell=4.4.2 mongodb-org-mongos=4.4.2 mongodb-org-tools=4.4.2

# If the installation reports: mongodb-org-tools : Depends: mongodb-database-tools but it is not going to be installed

# fix it by running the following in a terminal:

# sudo apt-get autoremove mongodb-org-mongos mongodb-org-tools mongodb-org

# sudo apt-get install -y mongodb-org=4.4.2

# Create the data directory

sudo mkdir -p /data/db

Startup and verification

hadoop@hadoop:~$ sudo systemctl start mongod.service

hadoop@hadoop:~$ sudo systemctl status mongod.service

* mongod.service - MongoDB Database Server

Loaded: loaded (/lib/systemd/system/mongod.service; disabled; vendor preset: enabled)

Active: active (running) since Sat 2024-05-25 12:09:56 UTC; 3s ago

Docs: https://docs.mongodb.org/manual

Main PID: 6598 (mongod)

Memory: 155.6M

CPU: 932ms

CGroup: /system.slice/mongod.service

`-6598 /usr/bin/mongod --config /etc/mongod.conf

May 25 12:09:56 hadoop systemd[1]: Started MongoDB Database Server.

Problems encountered

Creating a directory from the web UI fails with a permission error

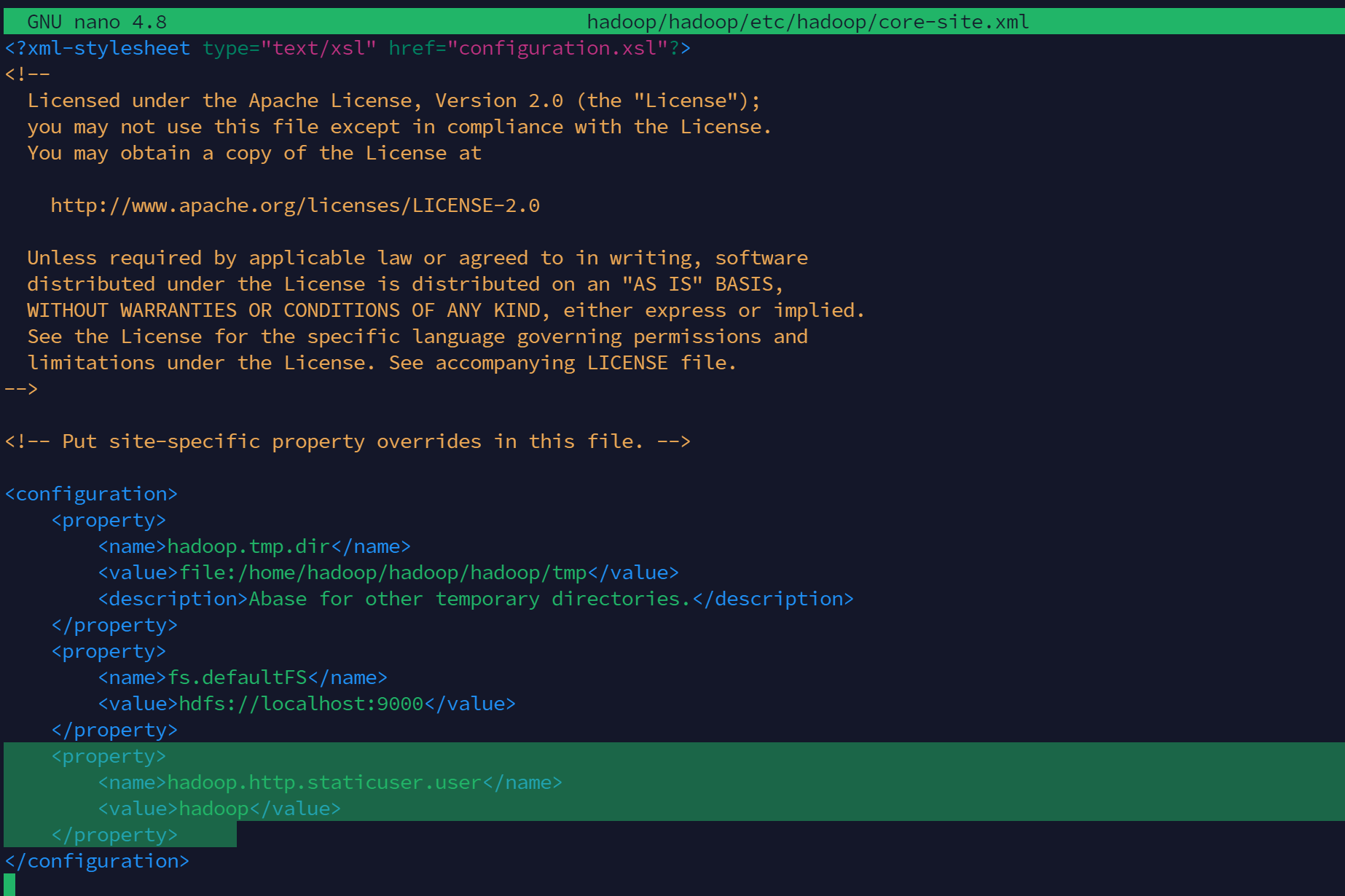

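Permission denied: user=dr.who, access=WRITE, inode="/":hadoop:supergroup:drwxrwxr-x

The web UI is acting as the wrong user: dr.who is Hadoop's default static web user. The correct user has to be set in the configuration; this guide uses the hadoop user, so add the following to core-site.xml: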

<property>

<name>hadoop.http.staticuser.user</name>

<value>hadoop</value>

</property>

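Uploading a file fails with: Couldn’t upload the file xxxx.yyy

Following a tutorial I found, I added the following to hdfs-site.xml:

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

Setting it to false disables HDFS permission checking, so any user can write; use it only on a test setup. The default, true, keeps checking enabled. (In Hadoop 2.x the current name of this key is dfs.permissions.enabled; the old name is deprecated but still honored.)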

After restarting Hadoop the problem persisted, so I had to dig into it myself.

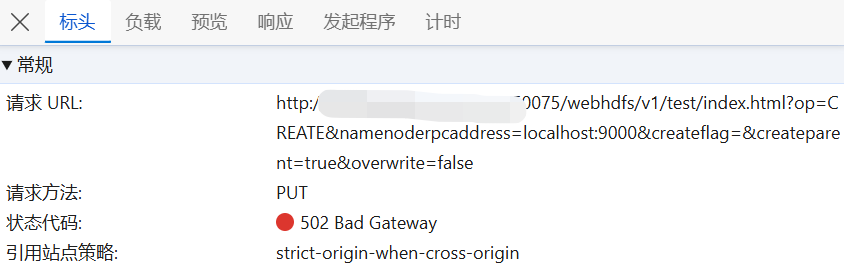

Suspecting a directory permission problem, I ran hdfs dfs -chmod 777 /testdir, but that did not help either. Checking the DataNode log with cat ~/hadoop/hadoop/logs/hadoop-hadoop-datanode-hadoop.log revealed:

2024-05-27 14:22:26,231 INFO datanode.webhdfs: 127.0.0.1 OPTIONS /webhdfs/v1/test/index.html?op=CREATE&namenoderpcaddress=localhost:9000&createflag=&createparent=true&overwrite=false 500

This looked like a network problem.

The browser console showed a 502 error.

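So something at the gateway was failing (the masked part is the domain name). It then occurred to me that Clash (the proxy client) might be the cause; after adding a whitelist rule for it, the problem was solved.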
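To confirm that a proxy is in the way, you can try the same upload with curl while bypassing all proxies (--noproxy '*'). WebHDFS creates a file in two steps: the NameNode answers the first PUT with a redirect whose Location header points at a DataNode, and the file body is then sent to that URL. This sketch assumes the Hadoop 2.x NameNode web port 50070 used above and the hadoop user; the function names and the NN/HDFS_USER variables are hypothetical.

```shell
#!/usr/bin/env bash
# Print the Location header value from raw HTTP response headers on stdin.
extract_location() {
  tr -d '\r' | awk 'tolower($1) == "location:" {print $2}'
}

# Upload a local file to HDFS via WebHDFS, bypassing any HTTP proxy.
webhdfs_put() {
  local src=$1 dest=$2
  local nn=${NN:-localhost:50070} user=${HDFS_USER:-hadoop}
  local loc
  # Step 1: ask the NameNode where to write; it replies with a redirect
  loc=$(curl --noproxy '*' -s -i -X PUT \
    "http://${nn}/webhdfs/v1${dest}?op=CREATE&user.name=${user}" | extract_location)
  if [ -z "$loc" ]; then
    echo "no redirect from NameNode" >&2
    return 1
  fi
  # Step 2: send the file body to the DataNode URL from the redirect
  curl --noproxy '*' -s -i -X PUT -T "$src" "$loc"
}
```

Usage: webhdfs_put ./index.html /test/index.html. If this succeeds (the DataNode replies 201 Created) while the browser upload still fails, the proxy is the likely culprit.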
